This On-Site SEO checklist covers more than ranking factors. It also focuses on usability, accessibility, and branding. Some of these items may not apply to every site.

On-Site SEO Checklist

1. Meta Tags

- Title Tags – You should include a title with every page on your website. It provides web crawlers with context for indexing and ranking while giving visitors insight into your content. Keep your title to 50-60 characters or fewer so that it is not shortened at the top of browsers or in tabs, where titles are normally displayed.

- Description Tags – Optimize the description portion of your markup by keeping it to no more than 155-160 characters, including spaces. Anything over that character count can result in your description being truncated, or not being used within SERPs at all. Though the description has no value for search ranking, it adds usability and can either drive traffic to your website or away from it.

- Geo-Targeting – You can also include Geo-meta tags, which provide context for localized searches. Mobile searches rely heavily upon this metric. Use www.geo-tag.de to automatically generate Geo-meta tags.

Code Example:

<meta name="geo.region" content="US-CA" />
<meta name="geo.placename" content="Glendale" />
<meta name="geo.position" content="34.151808;-118.24637" />
<meta name="ICBM" content="34.151808, -118.24637" />

- Language Tags – Ensure you are using appropriate language tags. W3C recommends the use of an HTML attribute instead of the old method of using a meta entry.

- Multiple Locations – Multi-regional and multilingual sites should reflect their versatility with easy to discern navigational elements, multi-regional meta tags, and text to help visitors get to the part of the site most appropriate for them.

- Hreflang Attribute – HTML markup using the hreflang attribute should be utilized to allow browsers and search engines to control for language automatically.

- Canonical Tags – Employ canonical tags to keep from being penalized for duplicate text. In situations where your website’s information needs to appear in more than one place, this coding element lets Google and other search engines know you are not trying to game the system.

Code Example:

<link href="http://www.example.com" rel="canonical" />

- Charset – Set your meta content type, also referred to as the charset attribute. This allows browsers and web crawlers to use the correct encoding to interpret and display the text on your site.

- Auto-Translate – You can keep Google from translating your content into other languages with the “notranslate” value, either by adding a Google meta tag to each page or by adding the class attribute class="notranslate" to individual elements. Translated text has the potential to cause duplicate content issues, and Google advises against using automatically generated content.

- Meta-Refresh – Though it is a tool that is available, the meta refresh function, which automatically refreshes a browser on a set interval and can even redirect visitors to different URLs, should not be used. Unexpected page refreshes or changes can be disconcerting and can negatively affect the user experience.
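Several of the tags above can be combined in a single document head. The following is a minimal sketch rather than a definitive template: the example.com URLs, the store name, the title, and the description text are placeholders assumed for illustration.

```html
<!DOCTYPE html>
<!-- Language declared with the lang attribute rather than a meta entry -->
<html lang="en">
<head>
  <!-- Character encoding, declared early in the head -->
  <meta charset="utf-8" />
  <!-- Title kept well under 60 characters -->
  <title>Handmade Sunglasses | Example Store</title>
  <!-- Description kept under 160 characters, spaces included -->
  <meta name="description" content="Browse handmade sunglasses for men and women, with free shipping on every order." />
  <!-- hreflang alternates for English and Spanish versions (placeholder URLs) -->
  <link rel="alternate" hreflang="en" href="http://www.example.com/en/" />
  <link rel="alternate" hreflang="es" href="http://www.example.com/es/" />
  <!-- Ask Google not to offer a translation of this page -->
  <meta name="google" content="notranslate" />
</head>
<body>
  <!-- Alternatively, exclude a single element from translation -->
  <p class="notranslate">Example Store</p>
</body>
</html>
```

Only the tags that apply to your site need to be included; a single-language site, for instance, would leave out the hreflang links.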

Robots Meta Tags

- Noindex – This is used to tell a web crawler not to index a specific web page. You would include this for all pages that you do not want showing up in SERPs.

Code Example:

<html>
<head>
<meta name="robots" content="noindex" />
</head>

More information about Robots Meta Tags and how to use them here.

- Nofollow Meta Tags – The nofollow meta tag is used on pages with links that should not be used in determining link value. Unlike tagging individual links, adding it to a page has a blanket effect on every link within that page.

Code Example:

<html>
<head>
<meta name="robots" content="noindex, nofollow" />
</head>

- Nosnippet – The nosnippet directive is a simple way to keep a text snippet of your page from appearing under its listing in search results. It also keeps cached versions of your pages from being displayed within results.

- Noodp – Noodp is a tool that can be used to keep descriptions housed in the Open Directory Project from showing up in search results pages. It can be used to increase the likelihood that your meta-description will be used instead.

- Noarchive – This meta tag is used to keep search engines from storing and showing a cached copy of your pages. It is commonly used for pages with time-sensitive data, as well as for any other pages for which you would not want a cached version to be available.

- Unavailable_after:[date] – When you want a page to be available in search results only until a specific date, you can use the Unavailable_after tag. It tells web spiders not to index the page once that date has passed.

- Noimageindex – If you happen to have graphics on your page that you do not want to be indexed by Google, the Noimageindex meta tag is how you do it. Doing this only stops direct indexing, though, and the use of a disallow in your robots.txt should be used if you want to halt indirect indexing from more obscure crawlers.

- None – The none tag instructs spiders to exclude a page from SERPs. It combines nofollow and noindex into a single logical entry rather than two.
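The remaining robots directives follow the same pattern as the noindex example. The lines below are a hedged sketch: each tag is an alternative rather than something to paste in as a group, and the date is a placeholder. Accepted date formats can differ between search engines, so check their documentation before relying on one.

```html
<!-- Each line is an alternative; a page would normally carry only one robots meta tag -->
<meta name="robots" content="nosnippet" />
<meta name="robots" content="noarchive" />
<meta name="robots" content="noimageindex" />
<!-- "none" is shorthand for "noindex, nofollow" -->
<meta name="robots" content="none" />
<!-- Placeholder date: stop showing the page in results once it has passed -->
<meta name="robots" content="unavailable_after: 2030-01-01" />
<!-- Directives can also be combined in one comma-separated list -->
<meta name="robots" content="noarchive, nosnippet" />
```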

2. Rich Snippets

Rich Snippets provide behind-the-scenes information for page content. There are several ways that additional, contextual information can be added to a webpage. Adding information in the form of microdata, microformats, or resource descriptions to your page’s HTML is the most effective way to go about adding this type of information.

The addition of microdata can also be accomplished with Google’s Data Highlighter Tool. Though Highlighter facilitates a simple, streamlined addition of microdata, it uses a scaled-down version of Schema’s markup.

Additionally, the use of Google Data Highlighter results in the addition of microdata that only Google can see, which tends to make other options preferable for those familiar with this type of markup.

- Microdata – Generally associated with Schema.org’s organizational model, microdata is used to add information to be used by search algorithms. Google and Bing use this markup to understand what the page is about, and it is the recommended standard to use for rich snippets.

- Microformats – Microformats work very similarly to microdata in that they facilitate the semantic web. Standards are spread out over several development groups, including hCard and hCalendar, which are used for business and event data, respectively.

- Google Data Highlighter – Data Highlighter is a simple, streamlined method for adding Google-readable snippets to a webpage. Bing and Yahoo cannot see it, but it reduces the technical requirements for adding this type of markup to a page.

- RDFa – Resource Description Framework adds information to be used by the semantic web for SERP placement in the form of attributes. This method of adding rich snippets is simpler to use from a logical standpoint than all other options save Data Highlighter.
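To make the microdata option more concrete, here is a small sketch that marks up a business with Schema.org's LocalBusiness type. The business name, locality, and phone number are placeholders assumed for illustration, not details from a real listing.

```html
<!-- Microdata using the Schema.org LocalBusiness type; all business details are placeholders -->
<div itemscope itemtype="https://schema.org/LocalBusiness">
  <span itemprop="name">Example Store</span>
  <!-- A nested item describes the address -->
  <div itemprop="address" itemscope itemtype="https://schema.org/PostalAddress">
    <span itemprop="addressLocality">Glendale</span>,
    <span itemprop="addressRegion">CA</span>
  </div>
  <span itemprop="telephone">(555) 555-0100</span>
</div>
```

The itemscope and itemtype attributes declare what kind of thing is being described, and each itemprop labels one visible piece of text, so the markup stays attached to content that visitors can actually see.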

3. Keywords

Despite a reduction in the impact on organic search engine indexing in recent history, keywords remain one of the most important elements of content development. While keyword stuffing can result in penalties in the SERPs, targeting the correct ones and using them naturally can bring engaged traffic with high conversion rates.

- Keyword Research and Planning – Picking the right keywords requires planning and forethought. Google, Bing, and social media sites like Facebook provide search data to provide insight into query frequency and advertising competition, which can act as a guide for targeting the keywords with the highest return on investment.

- On-Page Targeting – On-page targeting is what you are doing when you include keywords or semantic phrases within your text. Search engines take natural, non-spammy repetition of terms as an indicator of the overall content of a page.

- Keyword Density – Keyword density is no longer an important factor. Just keep writing in a natural tone and do not force anything into the content.

- Keyword Cannibalization – Cannibalization occurs when you repeat the same keyword or phrase across multiple pages, which reduces the likelihood that Google can discern subtle differences between them. Instead, keywords should be tailored to each page explicitly so the differences between them are clear for SERP ranking.

- Keyword Stuffing – Do not load pages with irrelevant and spammy keywords. All your text should read naturally and undue word/phrase repetition should be avoided.

- Location Keywords – Including location-specific keywords in your content can let search engines know you are especially interested in traffic from a specific area. Including place names and similar terms also provides a textual basis for integrating rich snippets, which add even more ranking potential when users are looking for a provider in the area you are targeting. This being said, keep your location keywords natural!

- Use Synonyms When Targeting a Keyword – Not only should you target a specific keyword; you should also be using common synonyms or related terms. This ensures that slight word choice differences do not inadvertently influence organic rankings.

4. Content

- Content Planning – You should have an explicit plan for content development that meets the needs of your consumer. Providing useful content is the most surefire way to drive high conversion, organic traffic.

- Heading Tags (H1, H2, H3, H4, H5, and H6) – Headings are used by Google and other search engines as context for searches. We recommend having one H1 per page, although HTML5 allows more than one. It is a good idea to have keyword-rich headings, but only if they read naturally! If you are interested in learning more about how to effectively use heading tags, please read our article about 4 Killer Headline Types and Strategies to Boost Your Content Marketing.

- Heading Tags’ Length – While there is no hard rule regarding character count for headings, you should keep them as short as possible. Long headings dilute keyword impact on ranking, so keeping them brief and informative is important.

- Strong Tags – Bold and italics signal to web crawlers that information is important on a page. In addition to the SEO value of using this method of drawing attention to the important text, it also adds usability and makes your text easier to scan.

- Content Strategy – Maintaining a strategic focus for your content is important. Before planning, you should establish guiding principles such as mission or vision statements to help maintain standards important to you and your consumer.

- Automatically Generated Content – You should always use original, helpful, and unique content that was developed specifically for your targeted market segments. Google’s quality guidelines forbid automatically generated content, and using it can result in penalties that can hurt your SERP ranking.

- On-page Site Branding – Remember that the purpose of a web portal is to draw visitors and make them come back. Leveraging the graphics and layout of your site to help build your brand in the minds of those that visit should be central to your planning and development.

- Printer Friendly Pages – Your consumers may need information you have provided while they are offline, which makes offering printer-friendly versions of your pages a must. To avoid duplicate content penalties from Google or other search providers, either exclude these pages in your robots.txt file or take advantage of canonical tags.

- Landing Pages – Your products or services should be outlined on a landing page. This provides clearly organized information, which when done correctly, can aid your consumers in making a purchase decision.

- Provide Solutions – Create useful tools, software, and interactive elements that others will want to link to. This helps to position you as a leader and problem-solver in your industry and can aid in building your brand in the hearts and minds of prospective clients.

- Lists – Ordered, unordered, and definition lists all provide highly scannable and keyword-rich content. Usability and SEO both benefit from their use, which can equate to higher organic traffic and repeat visitors.

- Link Bait – Your content should be on topics and in a format that makes them easy to share or link. Leveraging this tactic can result in direct, high conversion traffic.

- Content Uniqueness – You should generally try to avoid having duplicate content on your site. While things like phone numbers and addresses may have to be repeated, the vast majority of your text should be different than other content, either on your site or elsewhere on the web. Make your website/content stand out from others with compelling graphics, useful content, and an interface that requires as little effort as possible to use. Standing out among your peers means your brand is easier to remember.

- Spelling, Grammar, and Punctuation – In a world with spell-check on virtually every text-based interface available, there is no excuse for breaking language rules. Errors can negatively influence usability and perceptions, so you should make sure everything is well polished and edited for your intended audience.

- Contextual Links – The links that are within the main content of a website are more valuable for SEO than footer links. These links should always be helpful, use non-spammy anchor text, and be used discriminately to avoid distraction from the text.

- Keep It Fresh and Varied – Do not create too many pages about the same subject or keyword, because it can lead to keyword cannibalization and your site may appear spammy. Each page should be optimized for a different keyword. For example, your homepage could be optimized for “sunglasses” and your category pages could be optimized for “men’s sunglasses” or “polarized sunglasses.”

- Blogs and Podcasts – Both blogs and podcasts provide areas of engagement with your consumers. These lines of communication are easily shared with others with similar interests, which can drive direct traffic to your site and help position you as an industry leader or expert.

- Paginated Content – It is common to use pagination to split up content from long articles, or simply to spread the content out across multiple pages. It makes reading much easier, but you should always provide easy to understand navigation tools such as next/back buttons and breadcrumbs.

- Alt Tags – In addition to the accessibility benefits of alt tags (alternative text) on images, they can also affect SERP placement. Since crawlers cannot see images as the human eye does, they rely on webmasters to provide textual information for context, which is included in those tags. Alt tags are also useful for blind and/or visually impaired people to explain the image content.

- Image Title Tags – Title tags will provide the human reader and the search bots extra information about the image/video. They are also good for your site’s usability.

- Content is King – Increasing the size of your site with evergreen, quality pages is the only long-term strategy for driving traffic to your site. Wikipedia is an excellent example since it ranks number 1 for so many keywords.

- Social Media Integration – Taking social media into account in your design opens up many avenues of communication. It has never been easier to broadcast a message or have your brand spread by digital word of mouth. Consider using share buttons and badges.

- Favicons – Creating your own favicon is an excellent way to improve brand visibility and recognizability. Anyone that bookmarks your site will see the icon every time they click through, which can make remembering your brand much easier.

- Ad Density – While it is acceptable to advertise related items and services on your site, it should not be overdone. Ads should be kept to a minimum to avoid negative reactions from visitors as well as search engines, which use ad density as a ranking metric.
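Several of the content items above (headings, strong tags, lists, alt tags, and image title tags) come down to a few lines of markup. The fragment below is a minimal sketch; the page topic, wording, and image path are placeholders assumed for illustration.

```html
<!-- One H1 per page, with short subheadings beneath it -->
<h1>Polarized Sunglasses</h1>
<h2>Why Choose Polarized Lenses</h2>

<!-- strong and em mark the important text and make the paragraph easier to scan -->
<p>Polarized lenses <strong>reduce glare</strong>, which makes them <em>especially</em> popular for driving.</p>

<!-- An unordered list keeps the content scannable -->
<ul>
  <li>Reduced glare</li>
  <li>Less eye strain</li>
</ul>

<!-- alt describes the image for crawlers and screen readers; title adds extra information -->
<img src="/images/polarized-sunglasses.jpg" alt="Black polarized sunglasses on a wooden table" title="Polarized sunglasses" />
```

The keyword appears in the heading, the body text, and the alt text, but only where it reads naturally.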

5. Internal Links

If you would like to read about external links, please read our checklist on off-site optimization.

- Building Internal Links – You should link to other pages on your website that are closely related to the topic to provide more information. Internal linking can boost your rankings on the search engines and provide extra information to your readers.

- Internal Link Density – Keep linking where it makes sense, but we recommend 100 links or fewer per page. This limit is ideal except for sitemaps or directory sites.

- Anchor Text – The text you display in links is just as important as the links themselves. It should be relevant to the URL it leads to and should add context about why a user might want to click on it.

- Link Titles – You should always utilize link titles. Though they are not used as an SEO ranking metric, they add usability and can increase your click-through rate.

Code Example:

<a href="http://www.apple.com/mac/" title="Apple's Mac computers" >Mac Computers</a>

- No Follow Links – The nofollow attribute was initially introduced to address link spam and is commonly applied to paid links, so Google and other providers can easily identify them. Tagging individual links is more tedious than using the robots meta tag version, but it is ideal when you have a mix of follow and nofollow links on a page.

Code Example:

<a rel="nofollow" href="http://www.example.com/">Anchor Text</a>

6. Usability and Technical

- Usability First – When creating content, think about your human visitors before search engines. Algorithms are not perfect, but they are trying to be, and focusing on usability means that search engine updates will help, not penalize you.

- Staying Approachable – Follow all current usability and accessibility guidelines. Great websites that can help you with this include www.usability.gov and www.w3.org.

- User Experience – Your interface should include an organized and user-friendly navigation system. If your visitors have to work to figure out how to get around the place, they are less likely to convert.

The Golden Rule of SEO: Build your website for users, not spiders! – Emin Sinanyan

- HTML Sitemaps – Large sites should create a sitemap.html page for users. This provides an easy-to-understand directory that details the content of your site. Apple’s HTML site map is a great example.

- XML Sitemaps – You should also create a sitemap.xml for bots. This makes it easier for search spiders to crawl and index your site and to detect new and refreshed content.

Code Example (a robots.txt file that blocks all crawlers from one page):

User-agent: *
Disallow: /personal-data.html

- Breadcrumb Navigations – Breadcrumbs are text-based links on a page that help users navigate your website. In addition to the usability that they add for visitors, search engines use them for SERP results and to better understand your navigational structure.

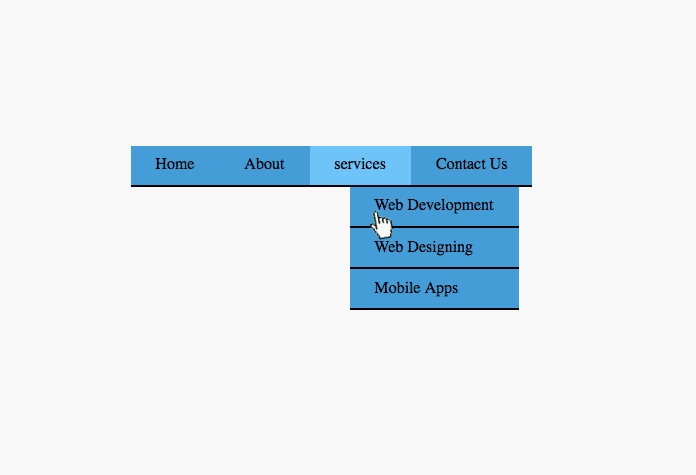
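A breadcrumb trail like the one described above can be written as an ordered list of links. This is a minimal sketch, and the page names and paths are placeholders assumed for illustration.

```html
<!-- Breadcrumb trail; the last item is the current page and is not a link -->
<nav aria-label="Breadcrumb">
  <ol>
    <li><a href="/">Home</a></li>
    <li><a href="/sunglasses/">Sunglasses</a></li>
    <li aria-current="page">Polarized Sunglasses</li>
  </ol>
</nav>
```

Using plain text links inside a labeled nav element keeps the trail readable for visitors, screen readers, and crawlers alike.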

- Navigation Organization – You should utilize multiple sets of navigation controls. For large sites, you can implement drop-down navigation menus or mega-menus, which can make the links easier to understand and use.

- .htaccess File – The .htaccess file is used to control access to your website. It is used to establish redirects, set custom 404 pages, boost site speed with compression, control access via IP, handle encryption, set default directory pages, and apply canonical tags to PDF and other image files, which can help you clean up what appear to be duplicate content issues due to website structure.

Redirects

Depending on how a visitor arrives at your site, they might encounter a URL with or without the www, which can lead to duplicate content issues. Redirecting one version to the other avoids the problem, and this can be accomplished either with domain forwarding through your web host or through your .htaccess file.

Do not trick your users when redirecting a page. Moving your site temporarily or permanently can make redirects necessary, but they should never be used solely for search engine ranking.

- Standardized Default Page – It is always a best practice to make navigation simple and standard. Redirecting all traffic to a simple example.com instead of example.com/index.html makes it easier for humans to read and also ensures there will be no duplicate content problems.

- 301 Redirection – This is the redirect to use when you have permanently moved a URL. It tells browsers and search engines to go to the new location automatically. It is the recommended way to move a site, and it informs Google to not penalize you for duplicate content in the transition.

Code Example:

Here is how you could 301 redirect an old URL to a new one in your .htaccess file. For a temporary move, use 302 in place of 301.

Redirect 301 /about-us.html http://www.example.com/about.html

- 302 Redirection – Very similar to a 301, a 302 redirects to an alternate web address. However, a 302 is intended specifically for temporary or non-permanent moves.

- Server Errors – Check your Google Search Console and Bing Webmaster Tools regularly, and make sure you do not have any server errors. Fix every single error ASAP.

- Hosting and Server Speed – Make sure you have a fast, reliable hosting provider. A slow site can elicit SERP penalties and can have negative effects on usability.

- Website Speed Optimization – You should work on your site speed to optimize each page. GTmetrix.com is an excellent resource for testing, and you should aim for an A score. Keep in mind that site speed is one of Google’s ranking factors!

- File Size – It is important to remember that not everyone has access to blazing-fast internet all the time. Your pages should either be under 100 KB in size or should be compressed to ensure they are indexed completely. We’d also like to note that file size is NOT a ranking factor; it is just recommended for faster page loading.

- Proper Coding – Make sure your website adheres to CSS/HTML validation standards and that you have no errors or warnings. Though it is not currently a ranking factor for Google, it has been suggested that it might be part of future algorithm updates.

- Deceptive Cloaking – Cloaking is when you hide your content from crawlers by providing alternative pages meant to manipulate SERP ranking. It can drive search traffic to your site for all the wrong reasons, causing your conversion rates to dip. Cloaking is against Google’s and other search engines’ Quality Guidelines. Avoid at all times!

- Hidden Text. Honesty is the Best Policy – Do not leverage hidden text or links to drive traffic. Though it may result in users arriving at your intended destination, underhanded tactics can hurt your brand’s reputation, can lead to negative user experiences, and can result in SERP penalization.

- Doorway Pages – You should avoid the use of doorway pages. Outside of sitemap landing pages for larger sites, all sections of your site should be content-rich and focus on aiding the consumer rather than driving traffic elsewhere.

- Security – Make sure your website does not have viruses, malware, or Trojan horses. Not only can this affect your SERP ranking, but users that end up with a digital infection from your site are also less likely to return.

- Broken Links – Links are there to add context and more in-depth information for crawlers and humans alike. Broken ones are sure to be, at the very least, frustrating to any site visitor that clicks on them or otherwise attempts to use them. Check and fix broken links regularly!

- Over-optimization – Do not over optimize pages for search engines. Doing so can actually have a negative effect on your SERP ranking.

- URL Format – Optimize your URLs for keyword content as well as length. Both humans and web crawlers use your web address for context about your site, and designing your structure with this in mind can make sure it serves both equally well.

- Dynamic Pages – Dynamic pages, whose URLs contain a “?”, are not crawled by every search engine. They should be used sparingly, and their addresses kept simple when they must be used.

- Frames and Flash – Search engines do not handle frames and Flash well, and both should be avoided. They can make it difficult or impossible for crawlers to see the text on your site, which has an obvious effect on ranking. Another disadvantage of Flash is that iPhones, iPads, and iPods cannot display it.

- Mobile Optimization – Now that Google has switched to mobile-first indexing, it is very important to make sure your website is mobile-friendly. Mobile-friendly websites get an extra boost in rankings and improve bounce rate and user experience.

- Browser Check – Also, make sure your site displays correctly on all major browsers, including mobile. The game is to drive conversions, and a site that does not render correctly on any browser represents a missed market segment.

- Google Analytics – Adding tracking code to your pages is a great way to keep up with the effects of your content development. Using this tool can provide demographic, usage, ranking, and geographic data that you can use to improve how you serve your visitors.
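For the mobile optimization item above, a common starting point is the viewport meta tag combined with a media query. The fragment below is a sketch under stated assumptions: the .sidebar class and the 600px breakpoint are hypothetical and would need to match your own layout.

```html
<head>
  <!-- Scale the page to the width of the device instead of a fixed desktop width -->
  <meta name="viewport" content="width=device-width, initial-scale=1" />
  <style>
    /* Desktop layout: sidebar sits beside the main content */
    .sidebar { float: right; width: 30%; }
    /* On narrow screens, stack the sidebar at full width */
    @media (max-width: 600px) {
      .sidebar { float: none; width: 100%; }
    }
  </style>
</head>
```

After adding it, check the result on several phones and browsers, as the Browser Check item recommends.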